Posts Tagged ‘data’

Thursday, October 6th, 2011

from http://www.piriform.com/defraggler, October 2011

Defragmentation is a process that reduces and reverses fragmentation. File system fragmentation occurs when the file system is unable to keep a file's data in one contiguous sequence. When that happens, data stored on the disk is broken into pieces and scattered across the drive. One of the major problems with fragmented data is that the system takes longer to access files and programs.

Files on a drive are stored in units called blocks or clusters, ideally positioned one after another. As files are added, removed, or changed, gaps of different sizes open up between them, and a gap may no longer be large enough to hold an entire file. The file system then fills these small gaps with portions of new or enlarged files, so parts of a file end up separated from the rest of it. Reading such a file requires extra trips across the disk, which adds Seek Time and Rotational Latency. Seek Time is the time it takes the head assembly to travel to the portion of the disk where the data will be read or written. Rotational Latency is the delay while waiting for the disk's rotation to bring the required sector under the head assembly.
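To make the mechanism concrete, here is a minimal Python sketch that models a disk as a row of clusters and uses a deliberately naive allocator that always takes the lowest-numbered free clusters. The allocator, the file names, and the cluster counts are all hypothetical; real file systems such as NTFS use far more sophisticated allocation strategies. The point is only to show how deleting a file leaves a gap that a later, larger file gets split across.

```python
from typing import List, Optional

FREE = None  # marker for an unused cluster


def allocate(disk: List[Optional[str]], name: str, size: int) -> None:
    """Give the file `size` clusters, always taking the lowest-numbered free ones.

    If no single gap is large enough, the file is split across several
    gaps, i.e. it becomes fragmented.
    """
    free_slots = [i for i, owner in enumerate(disk) if owner is FREE]
    if len(free_slots) < size:
        raise ValueError("disk full")
    for i in free_slots[:size]:
        disk[i] = name


def delete(disk: List[Optional[str]], name: str) -> None:
    """Mark every cluster owned by `name` as free again."""
    for i, owner in enumerate(disk):
        if owner == name:
            disk[i] = FREE


def fragments(disk: List[Optional[str]], name: str) -> int:
    """Count the separate contiguous runs of clusters belonging to `name`."""
    runs = 0
    prev: Optional[str] = None
    for owner in disk:
        if owner == name and prev != name:
            runs += 1
        prev = owner
    return runs


disk: List[Optional[str]] = [FREE] * 12
allocate(disk, "A", 3)
allocate(disk, "B", 3)
allocate(disk, "C", 3)
delete(disk, "B")       # leaves a 3-cluster gap between A and C
allocate(disk, "D", 5)  # 3 clusters fill the gap, the other 2 land after C
print(disk)
print("fragments of D:", fragments(disk, "D"))  # fragments of D: 2
```

File D ends up in two pieces, so reading it means moving the head to a second location, which is exactly the extra Seek Time and Rotational Latency described above.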

The primary purpose of a defragmentation tool is to sort, organize, and compact the blocks of data belonging to each file in order to reduce wasted space and speed up access. Windows operating systems come with their own “Disk Defragmenter” utility, which is designed to increase access speeds by rearranging files so that each one is stored contiguously. Many users feel that the pre-installed defragmenter isn’t the best on the market and have considered alternative tools. For the most part, all defragmentation software does the same thing, but it is very important to use only software from trusted developers. A poorly constructed defragmentation tool can cause problems such as corrupted data, hard disk damage, and complete data loss. Free software may also lack adequate support and may not perform its intended function correctly.
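The core job of a defragmenter, rearranging files so each one is contiguous, can be sketched the same way. This Python example uses a hypothetical cluster map (a list in which each entry names the file that owns that cluster, or `None` if it is free); it is not how Windows Disk Defragmenter or any other product actually works. It only computes the target layout: every file's clusters side by side, in the order the files first appear, with all free space consolidated at the end.

```python
from typing import Dict, List, Optional


def defragment(disk: List[Optional[str]]) -> List[Optional[str]]:
    """Return a new cluster map in which each file is stored contiguously.

    Files keep the order in which they first appear on the disk, and all
    free clusters (None) are gathered into one block at the end.
    """
    order: List[str] = []
    sizes: Dict[str, int] = {}
    for owner in disk:
        if owner is None:
            continue
        if owner not in sizes:
            order.append(owner)
            sizes[owner] = 0
        sizes[owner] += 1

    layout: List[Optional[str]] = []
    for name in order:
        layout.extend([name] * sizes[name])
    # Pad with free clusters so the disk keeps its original size.
    layout.extend([None] * (len(disk) - len(layout)))
    return layout


fragmented = ["A", "A", "D", "D", None, "C", "C", "D", None, "A"]
print(defragment(fragmented))
# ['A', 'A', 'A', 'D', 'D', 'D', 'C', 'C', None, None]
```

Working out the layout is the easy part. A real defragmenter must also move the underlying data on the drive without losing any of it, and that step is where a poorly written tool can corrupt files.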

If you still decide to use an alternative to the utility included with Windows, the paid options are probably the safest, because commercial software such as Diskeeper is put through testing to minimize these risks. There are also a few popular free alternatives, such as Piriform Defraggler, UltraDefrag, and Auslogics Disk Defrag.

Before making any decisions, weigh the strengths and weaknesses of the free and commercial defragmenting alternatives. After all, switching might not be worth the hassle, especially if the Windows Disk Defragmenter utility is running adequately.

Have a Great Day!

Dustin

ComputerFitness.com

Providing Tech Support for Businesses in Maryland

Tags: data, defragment, defragmentation software, file, files, fragmentation, Software, system

Posted in Desktop - Workstation, PC, PC Maintenance

Thursday, July 21st, 2011

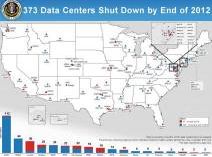

On Wednesday, July 20, 2011, the Obama Administration announced its plan to close 800 of the federal government's 2,000 data centers over the next four years. The government had initially scheduled 137 data centers to be shut down by the end of this year. The process is running ahead of schedule, however: 81 sites have already closed, and the government now expects to close 195 facilities in 2011. In addition to this revised figure, the White House announced that nearly 200 more will be closed by the end of 2012, bringing the cumulative total to just under 400 data centers.

from http://www.whitehouse.gov/blog/2011/07/20/shutting-down-duplicative-data-centers, July 2011

Since 1998, the United States Government has quadrupled its number of data centers. Over the years, the development of software that allows for multiple-platform access has enabled the government to become more efficient and reduced its need for many of these data centers. Many of these sites reportedly use only 27% of their computing power, even though taxpayers continue to pay for the entire infrastructure (land, facilities, equipment, cooling, and special security measures).

According to the government's plans, eliminating these data centers will help it operate more efficiently during this time of deficits. Over the next four years, the shutdowns will free up real estate and drastically reduce unnecessary spending. The data centers marked for closure vary in size, from some occupying over 200,000 square feet to others occupying only 1,000 square feet, and they are scattered across 30 states. Because of the specialized equipment they contain, data centers can consume on average 200 times as much power as regular office buildings, enough to power 200 residential homes.

As part of the President’s Campaign to Cut Waste, the closures will help identify misused tax dollars. The 800 sites scheduled for elimination build on the Administration’s ongoing effort to create a more efficient, effective, and accountable Government. According to Jeffrey Zients, the Federal Chief Performance Officer and Deputy Director of the U.S. Office of Management and Budget, “By shrinking our data center footprint we will save taxpayer dollars, cutting costs for infrastructure, real estate and energy. At the same time, moving to a more nimble 21st century model will strengthen our security and the ability to deliver services for less.”

This data center efficiency plan aims to save taxpayers over $3 billion and greatly reduce environmental impact. Along with closing these data centers, the government plans to move toward cloud services, starting with email and storage. The transition to cloud-based computing offers a tremendous savings opportunity. In an interview, Vivek Kundra, chief information officer for the federal government, said that tapping into cloud computing services could save an additional $5 billion per year. He also said that as these services grow, the government will continue to shift its efforts from maintaining redundant systems to improving the citizen experience.

Absent from the announcement was any mention of the impact on jobs. Although data centers do not typically employ large staffs, analysts still believe that tens of thousands of jobs could be displaced or affected by future shutdowns.

What do you think? Are the closures a good opportunity to reduce the Government’s wasteful spending and save taxpayers money, or is the possible effect on IT professionals too great?

For more information visit The White House’s Blog.

Thanks for Reading and Have a Great Day!

Dustin

ComputerFitness.com

Providing Tech Support for Businesses in Maryland

Tags: Campaign to cut waste, cloud, data, data center, government, shutdown, storage

Posted in Server, Storage

Friday, March 4th, 2011

Last week some Gmail users were faced with an unwelcome surprise. Many Gmail account holders found that their emails were either deleted or temporarily corrupted. Although most users were unaffected by the damaging “glitch”, those who were not so lucky temporarily lost most of their email and access to any crucial information in their accounts.

Since the problem began, most of the affected users have regained access to their accounts and had their lost emails restored. In a blog post, Ben Treynor, VP Engineering and Site Reliability Czar, apologized for the problems on Gmail's behalf and explained that the emails were never really lost. The bug affected multiple data centers, but because Gmail spreads its data across a large number of storage facilities and also backs it up to tape, the emails were never truly deleted.

Tape is a form of offline backup that allowed Gmail to preserve the data and transfer it back to the data center once the underlying issue was resolved. Gmail keeps redundant copies of its data so that it can be retrieved if something like this occurs. Gmail originally stated that the problem would be easy to fix, but Ben later asked customers to bear with them because transferring the data back was taking longer than expected. Following his Monday blog post, Ben Treynor provided an update stating that the data was flowing again and that all remaining affected users should now have access to their information.

The problem was traced to a storage software update. It was initially estimated that 0.08 percent of users were affected, but that figure was later revised to 0.02 percent. Although 0.02 percent may not sound like much, with nearly 200 million people using Gmail it still works out to just under 40,000 accounts (0.0002 × 200,000,000 = 40,000), so a good number of people were left to shoulder the consequences.

If there is one thing you should take away from this article, it is the importance of backing up your data! Data backup matters not only for companies like Google that protect client data, but for individual users as well. This incident is a perfect example of how important your data can be, and of how much you can lose when you are unable to access information you need. My advice is to keep copies of important emails and information offline, just in case you find yourself in a situation similar to the Gmail fiasco.
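For readers comfortable with a little scripting, one way to follow that advice is to copy messages from an IMAP mailbox into a local mbox file using only Python's standard library (`imaplib`, `email`, `mailbox`). This is a minimal sketch, not a supported backup tool: the host, account, password, and file name in the example call are placeholders, and it assumes IMAP access is enabled for the account and that your provider accepts a plain username and password (Gmail's IMAP server is imap.gmail.com, but check its current sign-in requirements before relying on this).

```python
import email
import imaplib
import mailbox
from typing import Iterable, Iterator


def save_to_mbox(raw_messages: Iterable[bytes], path: str) -> int:
    """Append raw RFC 822 messages to a local mbox file; return how many were saved."""
    box = mailbox.mbox(path)  # the file is created if it does not exist
    box.lock()
    try:
        count = 0
        for raw in raw_messages:
            box.add(email.message_from_bytes(raw))
            count += 1
        box.flush()
    finally:
        box.unlock()
        box.close()
    return count


def fetch_all(host: str, user: str, password: str, folder: str = "INBOX") -> Iterator[bytes]:
    """Yield every message in `folder` as raw bytes, opening the folder read-only."""
    conn = imaplib.IMAP4_SSL(host)
    try:
        conn.login(user, password)
        conn.select(folder, readonly=True)
        _, data = conn.search(None, "ALL")
        for num in data[0].split():
            _, msg_data = conn.fetch(num, "(RFC822)")
            yield msg_data[0][1]
    finally:
        conn.logout()


# Example call with placeholder credentials (not run here):
# saved = save_to_mbox(
#     fetch_all("imap.gmail.com", "you@example.com", "your-password"),
#     "gmail-backup.mbox",
# )
```

Opening the folder read-only means the script never changes or deletes anything on the server, and the mbox format can be opened later by many desktop email programs.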

For more news on all things Google visit Google Headlines and the Google Blog!

Have a Great Day!

Dustin

ComputerFitness.com

Providing Tech Support for Businesses in Maryland

Tags: data, data transfer, Email, gmail, google, mail, software update, users

Posted in Email, Storage

Friday, February 18th, 2011

When it comes to using a personal information manager like Microsoft Outlook, it is very easy to accumulate too much data. This buildup of information, which includes emails, contacts, reminders, and personal notes, can become too much for the program to handle efficiently.

Trademark of Microsoft Corporation

The more we use Outlook, the more items and folders accumulate, and the slower Outlook becomes. Standalone Outlook stores this data in a file with the PST extension, also known as a Personal Storage Table file, which is kept locally on your computer. As these files grow, Outlook has to work harder to refresh them and to open older archived items.

There are several things you can do to recapture Outlook's speed. Options that help keep Outlook running properly and efficiently include clearing away unused items, disabling add-ins, and removing RSS feeds.

Taking out the garbage:

Start by going through your emails and messages to see which ones you no longer need. Once you have deleted them, empty the Deleted Items folder, since deleted messages stay there until you do. You may already notice an increase in performance.

Spread out the data:

Once you have cleared out the old items, it is important to thin out your folders further. In Outlook 2003/2007 you can create a new folder or subfolder by clicking File, New, Folder. (In Outlook 2010, new folders are created from the Folder tab with New Folder, and a new Outlook Data File can be created by going to Home, New Items, More Items, and Outlook Data File.) After creating the new folders, reorganize your emails, messages, and reminders so that the information is not all located in the same folder.

Manage your inbox:

Similar to the previous tip, managing your Inbox means that once you are finished reading new messages you should move them to a different folder or delete them. The Inbox folder is the most commonly used folder in Outlook and continuously receives more data. Due to the constant feed of incoming messages the Inbox folder populates the fastest and can bog down the program if left unmanaged.

Consider reducing the security:

Who would have thought that too much security could be a bad thing? Your anti-spam filters take time to sort through emails, which slows down Outlook. Although removing your security entirely is not advised, you may be able to relax some of these settings to get a faster response time.

Remove the RSS Feed:

If you do not use RSS feeds, remove them. To do so, open the Tools menu, select Account Settings, go to the RSS Feeds tab, select the feed, and click Remove.

Disable the Add-ins:

As with RSS feeds, you can disable any unused add-ins, in this case through the Trust Center found under the Tools menu. Add-ins are only worthwhile if you actually use them, so to increase Outlook’s performance, disable any add-ins that sit dormant.

Backup or Archive your information:

If these options don’t help much and you still have too much data slowing down Outlook, consider moving your files to an external hard drive or setting up archiving for email older than 6 months (click Tools, Options, Other, AutoArchive). Backing up your files is a good idea in any case and can prevent data loss or corruption. Here, moving older items out of your main data file not only protects important files but can also improve the speed and performance of Microsoft Outlook.

Check out more information on Microsoft Outlook at the Microsoft Outlook Resource Center!

Have a Great Day!

Dustin

ComputerFitness.com

Providing Tech Support for Businesses in Maryland

Tags: boost, data, Email, file, folder, Microsoft Outlook, outlook, speed, speed boost

Posted in Browser Modifications, Software, Web Tips
